John Goodwin - Builder/Solver

A friend of mine on FreeNode.net pointed out what looks like an interesting performance bottleneck.

His server, while quite old (x232, 8668-54X), had three 18GB 10k RPM drives.

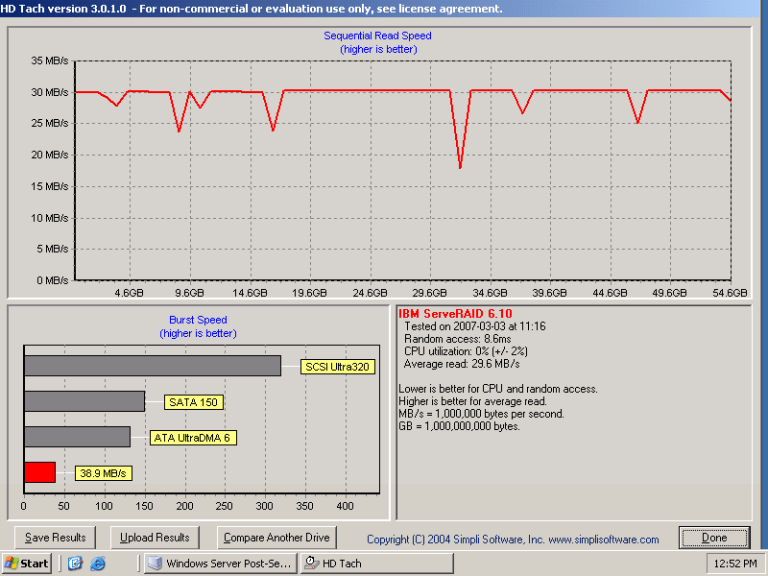

He sent me the following picture, in which the performance numbers seemed out of whack for several reasons.

Am I reading that right? 29.6 MB/sec average read? My external USB enclosure can do that with a parallel ATA 7200 RPM drive. This was THREE 10k RPM SCSI drives in RAID 0.

Another point that caught my eye was that there was no decay in the performance. Most drives decay in performance as you reach the center.

So, I had him try a larger stripe size. The run above used an 8KB stripe, which is a bit small.
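To show why a flat curve hints at a bottleneck outside the drives, here is a minimal sketch. Every number in it is hypothetical (the 60 and 35 MB/sec single-drive rates and the 30 MB/sec cap are made up for illustration, not measured on this server), and `drive_rate` and `array_rate` are names invented for the example. It only models the idea that an ideal RAID 0 adds up the drives' rates unless something upstream limits the total.

```python
def drive_rate(position, outer=60.0, inner=35.0):
    """Hypothetical single-drive sequential rate in MB/s.

    position runs from 0.0 (outer edge) to 1.0 (center); the rate is
    assumed to fall off linearly, which is a simplification.
    """
    return outer - (outer - inner) * position


def array_rate(position, drives=3, cap=None):
    """Ideal RAID 0 rate: per-drive rates add up, unless something
    upstream (controller, bus, driver) caps the total at `cap` MB/s."""
    total = drives * drive_rate(position)
    return total if cap is None else min(total, cap)


positions = [i / 4 for i in range(5)]  # 0%, 25%, 50%, 75%, 100% of the disk
uncapped = [array_rate(p) for p in positions]
capped = [array_rate(p, cap=30.0) for p in positions]

print("uncapped:", uncapped)  # drops steadily toward the center
print("capped:  ", capped)    # identical at every position
```

When the cap sits below even the slowest part of the array, the curve comes out perfectly flat, which is the shape in the screenshots.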

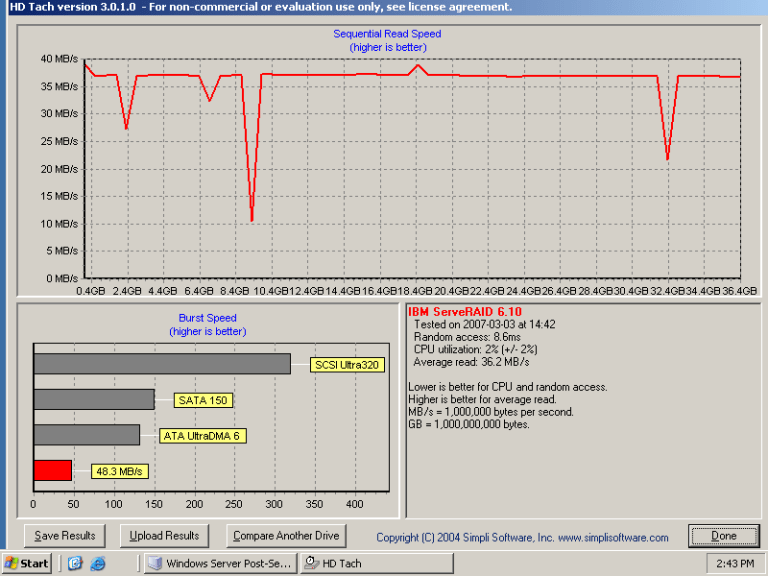

RAID 0 with 64KB stripe looks like this:

A gain of about 9MB/sec, but still a LOT less than I would expect. My RAID 0 with 2x320GB 7200 RPM SATA Seagates gets around 95MB/sec.

Also, I noticed there’s still no decay in performance.

So, for kicks, I asked him if he wouldn’t mind trying RAID 5 with 64KB stripe size.

Read ended up 2.5MB/sec slower, and still no decay in the performance over the entire partition.
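For a quick side-by-side, the three hardware RAID results can be worked out from the figures quoted so far. This is only back-of-the-envelope arithmetic: the 64KB numbers are derived from "about 9MB/sec" and "2.5MB/sec slower", so they are approximate, and I am assuming the RAID 5 figure is relative to the 64KB RAID 0 run.

```python
raid0_8k = 29.6              # MB/s, average read from the first benchmark
raid0_64k = raid0_8k + 9.0   # "about 9MB/sec gains", so approximate
raid5_64k = raid0_64k - 2.5  # assumes "slower" means slower than 64KB RAID 0
sata_raid0 = 95.0            # MB/s, the 2x320GB SATA array used for comparison

results = [
    ("RAID 0, 8KB stripe", raid0_8k),
    ("RAID 0, 64KB stripe", raid0_64k),
    ("RAID 5, 64KB stripe", raid5_64k),
]

for name, rate in results:
    share = rate / sata_raid0 * 100
    print(f"{name}: ~{rate:.1f} MB/s ({share:.0f}% of the SATA array)")
```

Even the best of the three stays well under half of what the two-disk SATA array manages.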

This is really odd. It looks like there’s some sort of bottleneck we don’t know about. Considering he has the latest firmware and the latest drivers for his card, you would expect to see something much higher.

If anyone has any thoughts, please post some comments.

Update 2007-03-10 09:44AM EST

My friend sent me the following screenshot to verify that he was indeed using the latest drivers from IBM.

Also, I was able to find some other people complaining about the same issue.

One thing I asked my friend to do is to post some software RAID results.

If you have any helpful information, please post some comments.

Update 2007-03-10 12:58PM EST

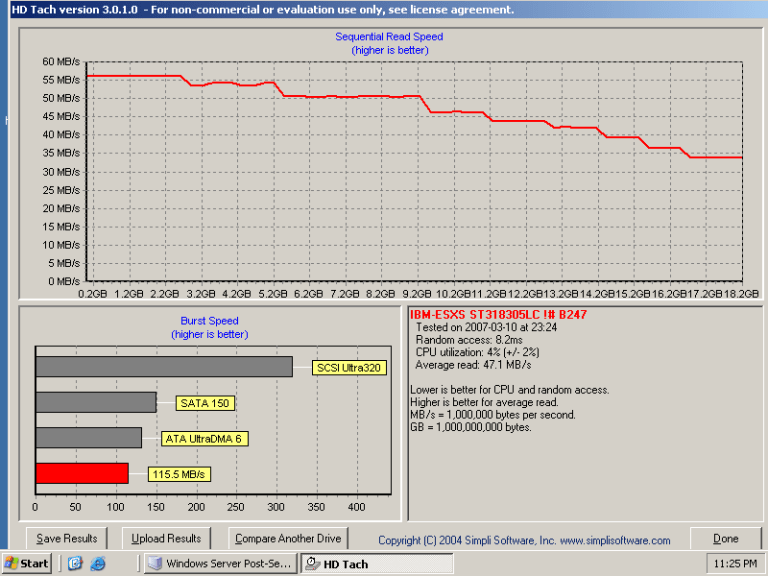

I had my friend perform some tests using his three 18GB disks with one disk for booting, and 2 as dynamic RAID 0.

Interestingly, HD Tach seems to cut through the Dynamic Disks feature and benchmark the devices individually, so single drive and RAID 0 both benchmark like this:

Now that performance is a LOT more in line with what I expected to see. Good single drive starting performance, and decay over the drive surface.

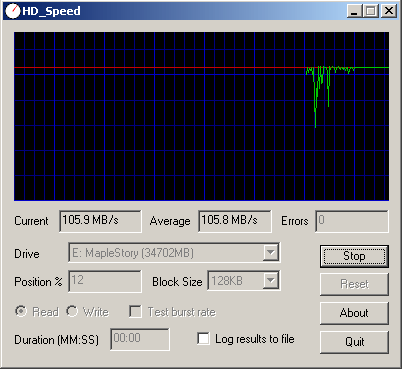

Okay, now that we know a single drive outperforms every 3-disk RAID he could set up using the RAID card, let’s see how dynamic disks fared with a benchmark tool that does not separate the dynamic disks and test them individually. To do this, we had to use HD_Speed:

Wow, isn’t that something? Software RAID performing about 3x as fast as his original RAID 0 performance using a RAID card.
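If you want a rough number of your own without a dedicated tool, a sequential read test is easy to sketch. The function below is not how HD Tach or HD_Speed work internally (I don't know their implementation); it simply reads a file front to back and divides bytes by elapsed time. The name `sequential_read_rate` and its parameters are made up for this example. Note that it reads through the file system, so the OS cache can inflate the result unless the file is much larger than RAM.

```python
import time


def sequential_read_rate(path, block_size=1024 * 1024, max_bytes=None):
    """Read `path` sequentially and return (bytes_read, MB_per_second).

    block_size is the size of each read request. max_bytes optionally
    stops the test early; the last block may overshoot it slightly.
    """
    total = 0
    start = time.perf_counter()
    # buffering=0 skips Python's own buffer, not the OS cache.
    with open(path, "rb", buffering=0) as f:
        while max_bytes is None or total < max_bytes:
            chunk = f.read(block_size)
            if not chunk:  # end of file
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    rate = total / elapsed / 1_000_000 if elapsed > 0 else float("inf")
    return total, rate
```

Calling `sequential_read_rate("some_large_file.bin")` on a big file stored on the array gives one average figure. It will not show the decay curve across the disk surface the way the graphs above do.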

My friend asked me if this basically meant hardware RAID sucked. I’d have to say no, but it does imply this card does. I’ll be getting my own card from a similar line (ServeRaid 6i) and I’ll benchmark that too. I hope I don’t get similar benchmarks, or I’ll have to sell it to someone else and use software RAID.

If I can’t get at least 150MB/sec sequential reads using the RAID card, I’d rather do a 2-drive RAID 1 and a 4-drive RAID 5 using software RAID. I have a feeling I won’t be getting that level of performance with the ServeRaid, based on the threads above and my friend’s experience. Such a pity for IBM to release this flop of a product (I would invite anyone from IBM to set me straight if I’m missing something).

Again, if you have any comments, please post them.

Updates

2007-03-16 09:40AM EST Added IBM ServeRaid SCSI Performance Bottleneck – Part 2

2007-03-23 Added IBM ServeRaid SCSI Performance Bottleneck – Part 3